A lot of folks have been chatting about ChatGPT (that’ll be the last dad joke, I swear…), the latest experiment out of openai.com, home of the DALL-E image generation AI. I’ve been generally pretty impressed with what I can get from DALL-E (enough to purchase some credits to help generate art for my ongoing Dungeons & Dragons campaign), but there are times when I need to massage the results a bit. I have also followed Janelle Shane’s awesome AI Weirdness blog for a long time, and read her book You Look Like a Thing and I Love You, so I’m pretty familiar with what AI and machine learning are good at and where they typically fail. What started as something I paid attention to because it was funny (ha ha, machine thing go durr durr durr) has evolved into a full-blown interest for me.

I was a little late to the ChatGPT party, but I finally joined this week and have both played with it casually and gotten some arguably decent results out of it (with some caveats). I have some thoughts.

ChatGPT is really, really bad

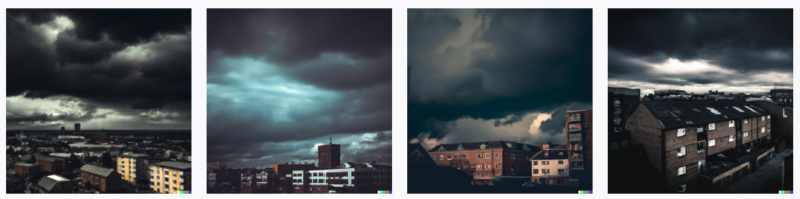

My first attempt at getting ChatGPT to do something vaguely useful was to try to convince it to generate DALL-E image generation prompts. Because here’s the thing with DALL-E: since these models are trained on the internet, there’s a lot of weird, random shit that makes them tick that really shouldn’t. For example, if I ask DALL-E to give me a “dark, moody modern urban landscape scene in muted tones with dark stormclouds overhead”, I get something like this:

Arguably, this is what I asked for. It reminds me of photographs of Russia or some European cities on a particularly dreary day. However, if I instead give it “dark, moody modern urban landscape scene in muted tones with dark stormclouds overhead dramatic trending on artstation”, it gives me something like this:

Holy crap! That’s a big difference! Not only does this version evoke much more of the dark, moody feeling I was going for, but the whole perspective changed — rather than being a skyline view of some multi-tenant housing or office buildings, you are at street level — there’s definitely a stronger feeling of being inside the image.

Now, there are arguments that how DALL-E gets from point A (a fairly representational image that generally matches the requirements I gave it) to point B (an impressionistic interpretation with more artistic flair) is by stealing ideas from artists on the internet. Surely the “trending on artstation” part implies that it’s going to source ideas from whatever it can find that was, presumably, at one point in time, trending on Artstation.com. I’m not going to debate the morality of computers stealing ideas from human artists. (FWIW, I believe that artists should be compensated fairly for their work, but I simultaneously believe that all art is derivative, and that the art a computer can generate based on prompts can get close to, but is not as good as, art created by a human who understands nuance and emotion. But we’ll get to that…)

There are all sorts of stupid little tricks like the “trending on artstation” prompt that are generally meaningless to a human reader but that the AI is able to associate directly with a particular style; in this case, that style is the difference between a fairly representational image and a more impressionistic one. If I gave the same art prompt to a human artist, they would look at me funny, but I give it to a machine and, apparently, it associates that with the more dramatic, up close and personal representation of the prompt.

(Not to belabor this point, but before you say “But Chris, you told it you wanted a dramatic image and that was the other difference in the prompt! Surely that’s playing a role!”, here is what DALL-E gave me when I kept the “dark, moody modern urban landscape scene in muted tones with dark stormclouds overhead dramatic” but omitted the “trending on artstation”:

Once again, we have representational images of dreary tenement buildings, but this time the storm clouds above are a bit more dramatic. Once again, the view is from up above, not the street-level view that “trending on artstation” gave us.)

So, my first task for ChatGPT was to try to get it to give me prompts that I could feed into DALL-E that would have all those stupid keywords. After all, who should know an AI better than an AI? I wanted to see if I could coerce it into teaching me some tricks that I could use (besides “trending on artstation”) in my DALL-E image prompts. After all, one of the things that I know AIs are relatively good at is generating lists. Sadly, it straight up refused to give me anything. At all. And it seemed annoyed that I was asking.

First, I asked it for the thing that I wanted: I want a prompt for DALL-E that would accurately represent a Cthulhu Mythos elder god. I know that to do this myself, I’d need to pull out a lot of stupid tricks, because I’ve actually done it, and I did.

What I wanted was to know which of those tricks were most important when attempting to generate an image that evoked Cthulhu Mythos creatures. But first, ChatGPT seemed confused about what I was asking — it responded by saying it’s not able to generate images. Yes, I know this, I wanted you to give me the prompts. When I insisted, it said “well, I can’t give you image generation prompts for DALL-E, either.” So, I removed the “DALL-E” bit and tried again, just give me prompts for any image generation AI. It responded with a series of generic “generate me an image of a Cthulhu Mythos elder god” prompts.

Thanks, ChatGPT.

Humans 1, AI 0.

ChatGPT is really kind of okay

We know (because it keeps telling us) that ChatGPT is a language processor. Okay, let’s talk about language then. I decided to ask it to tell me a story. This was just a test to see what it did with relatively vague information but a few important keywords. I gave it: tell me a story with romance elements in the style of H.P. Lovecraft.

First try, this is what it produced:

Once upon a time, in a small New England town, there lived a young couple named Sarah and Thomas. They were madly in love and spent every moment they could together. One day, they decided to go for a walk in the nearby woods.

As they strolled hand in hand, they came across an old, overgrown trail that they had never noticed before. Curiosity getting the better of them, they decided to follow it.

As they walked deeper into the woods, they began to feel a sense of unease. The air grew colder and the trees seemed to close in around them. Despite their growing fear, they pressed on, driven by their love for each other.

Eventually, they came across an old, crumbling mansion. It was clear that it had been abandoned for many years, but there was something about it that drew them closer.

They entered the mansion and were immediately struck by the feeling of evil that permeated the air. They explored the rooms, each one more decrepit and eerie than the last.

In the library, they found an ancient book with strange, unreadable symbols on its cover. As they flipped through the pages, they felt a growing sense of dread. The book seemed to be filled with dark, forbidden knowledge.

But their love for each other was stronger than their fear, and they continued to explore the mansion together. As they did, they stumbled upon a hidden room filled with strange artifacts and arcane objects.

In the center of the room, they found a small crystal orb. As they gazed into it, they were overcome by a feeling of pure, unbridled love. They fell into each other’s arms, lost in the moment.

But their happiness was short-lived. Suddenly, they heard a loud, inhuman roar from somewhere deep within the mansion. They knew that they had to leave, but it was too late.

As they tried to flee, they were confronted by a monstrous creature, its eyes glowing with hatred. They fought bravely, but they were no match for the beast. In the end, they were consumed by its wrath, their love unable to save them from the horrors of the mansion.

Thus, Sarah and Thomas were lost to the darkness, their love doomed to be forever remembered in the pages of the ancient book that they had discovered.

A Lovecraftian story by ChatGPT

Okay, so this is…something. There are a couple of things going on here that piqued my interest.

- There are a lot of Lovecraftian tropes: the New England setting, the decrepit mansion, arcane objects and symbols, a book with strange foreign writing, an unnamed, monstrous creature. I haven’t read much Lovecraft, but I have been getting deeper into Call of Cthulhu (and I listened to a fair amount of death metal in the 90s), and all of these are things I would expect in a story “in the style of H.P. Lovecraft”.

- The twist at the end! I legitimately didn’t expect the ending and was surprised and pleased that an AI could trick me. Sarah and Thomas being sucked into the book by their encounter with the creature was truly delightful. It fits the theme of the story, it fits in with the previously mentioned book, it fits with the horror tropes even though I never asked it to give me a horror story — it inferred horror based on the H.P. Lovecraft prompt.

On the other hand, some things it does badly. The “romance elements” are forced. We’re just continuously reminded that Sarah and Thomas love each other so very, very much in virtually every paragraph, but there are never any details, never any actual emotion. Show, don’t tell! This is a fairly juvenile interpretation of incorporating “romance elements”; it shows a lack of maturity in understanding what those romantic elements might include (beyond, I guess, “strolling hand-in-hand”).

What’s notable here is something that Janelle Shane mentions a few times in You Look Like a Thing…: AIs are generally very bad at remembering their own contexts. The reason a lot of AI-generated text (especially from smaller models) gets progressively more unhinged is that there’s a limit to the amount of stuff the model can keep in memory at any given point, and eventually it starts to forget things it already said. That problem seems absent in this story, although it’s worth noting that I didn’t ask for a particular length, and both this story and the ones I got from similar requests afterward were all fairly short (under 500 words). I assume the length of the story is tied to that memory limit, because obvious recurring themes persist through the story and it’s able to keep the prompt in memory through the whole thing: it remembers not only obvious things like the “romance elements” but also details that it introduced into the story itself (like the mysterious book).

My friend Gary ran the story through a readability calculator and got the following result:

Timestamp: 12/07/2022 - 07:53:30am

Purpose: Our Text Readability Consensus Calculator uses 7 popular readability formulas to calculate the average grade level, reading age, and text difficulty of your sample text.

Your Results:

Your text: Once upon a time, in a small New England town, the ... (391 words total)

1. Flesch Reading Ease score: 79 (text scale). Flesch Reading Ease scored your text: fairly easy to read.
2. Gunning Fog: 9 (text scale). Gunning Fog scored your text: fairly easy to read.
3. Flesch-Kincaid Grade Level: 6. Grade level: Sixth Grade.
4. The Coleman-Liau Index: 8. Grade level: Eighth grade.
5. The SMOG Index: 6. Grade level: Sixth Grade.
6. Automated Readability Index: 7. Grade level: 11-13 yrs. old (Sixth and Seventh graders).
7. Linsear Write Formula: 7. Grade level: Seventh Grade.

READABILITY CONSENSUS

Based on (7) readability formulas, we have scored your text:

- Grade Level: 7
- Reading Level: fairly easy to read.
- Age of Reader: 11-13 yrs. old (Sixth and Seventh graders)

The consensus is that the readability of the story is appropriate for 7th grade (11-13 years old) which seems close to the mark — at least if I were thinking of a human writing this story. It’s certainly not Lovecraft-level “master of horror”, but it’s not bad…certainly not bad for a machine.

ChatGPT is really kind of good…with some help

There’ve been several discussions on that bird site started by people who have had ChatGPT build entire WordPress plugins and actually gotten successful, working plugins that do the thing that was requested. I had an actual use case yesterday where I needed to do a thing in a bash script, but didn’t know exactly how to do it. Specifically, I needed to pull the values of two entries out of some ASCII table-based output so that I could use them in a script I was working on. I’m not great with regex and I know enough bash to get by, but I don’t know all the existing functions that you can rely on to extract information from strings.

I had to try a bunch of times. Partially, that was because ChatGPT was getting overwhelmed by traffic and was in the process of scaling (a message was displayed that there might be issues), so some of my prompts came back with a message to the effect of “your request could not be processed, please try again”. When I did get code back, and tested it, it blew up on some details and edge cases in the output that I was feeding it that were inconsistent. Things like lines that are all dashes in the output to block out the information and make it human-readable, or a line where there was a label, but the value spread across two lines (so, effectively, there was a line with no label), or labels that were multiple words (so it couldn’t break on spaces). After multiple attempts to get it to produce code that worked when I tested it, I fed ChatGPT this prompt:

using the following output, write a function in bash that can return the name or id

…followed by the table of information I wanted to pull the data from and then:

I need to return just the ID value and the name value

It gave me code that looked like this:

It elaborated and shared another code block that showed usage:

The idea here being that if I fed $input with whatever data I needed, then I could use the get_field command to extract those values into $id or $name or whatever I wanted assuming the “ID” and “Name” values existed in the data I was storing in $input.

Now, this still didn’t work out of the box, but because I had gone through a number of iterations prior to coming to this conclusion, there were a few things I could pick out of those instances that could account for the inconsistencies in the data that I was feeding into it. My final iteration of the code accounts for those inconsistencies:

The first thing the code is doing is defining a local variable called input. This is going to take whatever data is fed into get_field, so if I was storing the output of the command I was trying to get data from into a variable called output, I would be able to use the suggested id=$(get_field "ID" "$output") assuming that “ID” was a field in that output.

The second addition is to strip whitespace. This may or may not be necessary, since I think the awk command might do that for me, but I didn’t want to get a result padded with stray spaces around 123456789 when I requested an ID.

The last addition to the code is stripping out lines that are just dashes, which are used in my output to block out the content visually and are not only useless when I want to pull information out of it, but actively detrimental.

The result is that if I have data that looks like this:

…then I could store that data into a variable like input and run the code in the usage example above (id=$(get_field "ID" "$input")) and be able to echo that information into some other bash function, which is what I wanted.
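As a rough illustration of the approach described above (not the exact code from that session), a get_field function along these lines might look like the sketch below. The table layout is an assumption: pipe-delimited rows of the form "| Label | Value |" with separator lines made of dashes and plus signs. The sample data and the "Created By" label are hypothetical.

```shell
#!/usr/bin/env bash

# Hypothetical sketch: print the value that belongs to a label in
# pipe-delimited, ASCII-table-style output.
get_field() {
  local field="$1"   # label to look up, e.g. "ID" or "Name"
  local input="$2"   # the full command output, passed as one string

  printf '%s\n' "$input" | awk -F'|' -v field="$field" '
    # Skip separator lines made up only of dashes, pluses, pipes, and spaces.
    /^[-+| \t]*$/ { next }
    {
      label = $2
      value = $3
      # Trim leading and trailing whitespace from both columns.
      gsub(/^[ \t]+|[ \t]+$/, "", label)
      gsub(/^[ \t]+|[ \t]+$/, "", value)
      # Compare the whole label, so multi-word labels work too.
      if (label == field) { print value; exit }
    }'
}

# Made-up sample data in the assumed layout.
input='+------------+--------------+
| ID         | 123456789    |
| Name       | My Test Site |
| Created By | admin        |
+------------+--------------+'

id=$(get_field "ID" "$input")
name=$(get_field "Name" "$input")
echo "$id"    # prints 123456789
echo "$name"  # prints My Test Site
```

Splitting on the pipe character instead of on spaces is what lets multi-word labels and values survive intact. A row whose label column is empty (a value that wrapped onto a second line) never matches a requested label, so this sketch returns only the first line of a wrapped value.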

ChatGPT is (probably not) going to take your job

To get to something functional in a context I needed for my real life (coding) job, I needed to significantly coerce the AI into giving me something that fit what I was looking for. This is in contrast to something like GitHub Copilot where, if I write a function name or a comment (or both), Copilot will fill in the blanks and write the function or code for me with a fair amount of accuracy for relatively simple things. (Worth noting that I did try to feed Copilot similar prompts and it was not able to come up with something workable on its own.)

Eventually, though, it was able to give me something usable, and that speaks to how useful something like this is. It’s fairly well known in the developer space that a lot of our code is copy-pasted from places like Stack Overflow. Using an AI to generate code isn’t significantly different from scouring the internet for examples that loosely resemble what you’re looking for (and, for what it’s worth, I did that, too).

I think the real notable feature of ChatGPT is that it’s able to hold the entire conversation in memory, or at least refer back to earlier prompts and responses. The prompt I used to get the code that ended up going into my project was the second attempt because the first one didn’t work — or so I thought. In the examples it gave the first time, it took a single line of output and showed how I could extract the data I was looking for, but that didn’t match the data I wanted (the full output). When I said “the input I have is the full string” (meaning, the entire output, not just an excerpt) and repeated the prompt, it repeated the same answer, but was more explicit about what data I could feed into the get_field function. That shows an ability to learn and adapt based on the conversation and interaction with a human operator.

For more extended “conversations” I was able to ask the AI to come up with names for a fictional demigod based on a series of personality quirks/qualities, come up with a story about them, and then, give me an elevator pitch for a Marvel Cinematic Universe movie featuring that character as the villain (it complained a lot about this at first, but eventually I was able to get it to give me a hypothetical plot summary). This was a long conversation, but the entire time it was able to retain information from both the prompts I gave it and the responses and stories that the AI itself wrote. That’s definitely a step up from some of the garbage that has come out of earlier/smaller models.

Having used Copilot, and now ChatGPT, for actual work stuff in a development context, I can see how it can be helpful. But right now, it’s not sophisticated enough to come up with something complex that wouldn’t also need human supervision. I just finished watching Hidden Figures last night, and there’s a scene where they need to validate the numbers that the brand new IBM computers came up with for the landing coordinates because they were different from earlier numbers. In order to check, they needed to bring in a human, Katherine Johnson, to do all the math and check all the formulae by hand to ensure that the computer came up with numbers that they felt confident about.

I think that’s where we are with AI, and I think that’s where we’ll be for a while. It’s getting better, and you can get some interesting stuff out of it, but it’s going to be a while before AIs start taking real jobs. Instead, I can see a role like “AI Supervisor” needing to be invented, or situations where AI is just another tool at your disposal but you are still responsible for your area of expertise/influence, until such a time that AIs are fully able to understand the context and nuance that humans are much more proficient at.

A previous version of this post was titled Getting the Most out of ChatGPT. I’ve updated it to include DALL-E which is discussed almost exclusively in the first half.
